Do You Need to Be Aware of ISO/IEC TR 29119-11 on Testing AI/ML Software?

At the end of 2020, ISO published the technical report ISO/IEC TR 29119-11. Its title is “Guidelines on the testing of AI-based systems”.

A lot of companies want to know how to test their devices based on artificial intelligence (AI) techniques according to the state of the art. That’s why ISO/IEC TR 29119-11 has set out to provide concrete guidance on how to do this and thus to describe the state of the art. But does the standard achieve this aim?

If you can’t wait for the answer, go straight to section 3 “Overall evaluation”.

1. Overview of ISO/IEC 29119-11

a) Scope of application

ISO/IEC 29119-11 is part of a series of standards on software testing. For example, part 2 describes the test processes, and part 4 the test techniques. Part 11, which we’re going to look at here, is intended to provide guidance for testing AI-based software, irrespective of the

- Sector

- Type of device (standalone software, physical device)

- Number of artificial intelligence components

b) Structure of the standard

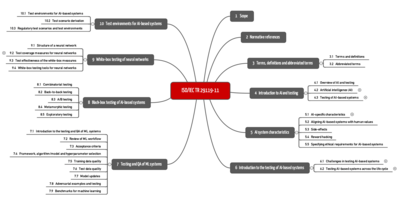

ISO 29119-11 is 60 pages long and consists of 10 sections and an annex.

2. The most important contents

Section 1: Scope

Although the title of the first section is “Scope”, it spends more time describing the goals and contents of the standard.

Section 2: Normative references

The standard does not mention any normative references.

Section 3: Terms, definitions and abbreviations

The third section contains 88 definitions. That’s a lot. But there is largely no reference to sources. And some definitions are surprising to say the least. For example, experts in machine learning understand the term “false positive” somewhat differently to the authors, who define the term from the software tester’s point of view:

“incorrect reporting of a pass when in reality it is a failure”

ISO/IEC TR 29119-11 Section 2

Section 4: Introduction to AI and testing

The very high-level introduction in section four covers, among other things, use cases, types of AI models, AI frameworks such as TensorFlow, and regulatory standards. Opinions may be divided on the timeliness, content and selection of these lists.

There are almost no regulations relevant to medical devices.

Additional information

Read more on the regulatory requirements for the use of ML in medical devices.

Section 5: AI characteristics

The authors introduce the quality model described in ISO 25010 and come to the surprising conclusion:

However, AI-based systems have some unique characteristics that are not contained with this quality model, such as flexibility, adaptability, autonomy, evolution, bias, transparency/interpretability/explainability, complexity and non-determinism.

ISO/IEC TR 29119-11 Section 4

ISO/IEC TR 29119-11 even calls these attributes “non-functional characteristics.”

The assessment “not contained within this quality model” is hard to understand for several reasons:

- The list in section 4 includes several concepts, such as quality properties (e.g., adaptability) and error types (bias). The absence of errors, in this case the correctness of values, is an explicit part of the quality model (functional correctness).

- Other quality aspects are also very much included in ISO 25010’s quality model. For example, interpretability is an aspect of maintainability, particularly analyzability.

- The terms in the list refer to the model and the product (autonomy). The two should not be confused. Whether devices are autonomous or not does not depend on the AI.

- It remains unclear how the authors have arrived at the conclusion that the output of AI models is non-deterministic.

Section 6: Introduction to the testing of AI-Based systems

The authors of ISO/IEC 29119-11 see the creation of specifications as particularly challenging. For example, the desired system output is often not known, which is also a problem when creating the test oracle.

A medical device manufacturer who tried to claim anything like this would be likely to run into problems with their notified body. The manufacturer would have to be able to specify precisely for test data whether the system should, for example, be capable of detecting cancerous tissue on a specific CT image or not. The manufacturer has to specify this during labeling.

ISO 29119-11 considers the concepts of unit, integration and system tests to be transferable to software that contains AI components. That is understandable. The standard does not give any specific instructions for how to perform these tests; it refers to the later sections.

With regard to unit testing, ISO 29119-11 does not distinguish between

- Software that is part of the medical device and

- Software that is used for data processing

From a software engineering perspective, this makes sense. From a regulatory perspective, however, the two cases have to be differentiated. ISO 13485 and IEC 62304 mean that different standards are actually applicable.

Tip!

In this context, pay attention to the regulatory requirements of ISO 13485 on computerized systems validation.

Section 7: Testing and QA of ML systems

The seventh section, unlike the other sections, only talks about machine learning and not artificial intelligence. The standard does not reveal the reasons for this switch.

Anyone hoping to find concrete guidance on how to test ML systems in this section will be disappointed. The authors devote two or three relatively general sentences and a sub-section each to various aspects such as “test data quality.”

To use one example, instead of guidance, the subsection on adversarial attacks contains a description of these attacks but no information on how you can test the robustness (ISO 25010 criterion) of a system against these attacks.

Section 8: Black-box testing of AI-based systems

Section 8 is also fairly superficial. For example, the sub-section on combinatorial testing describes in a few sentences what this involves. However, ISO 29119-11 does not give any details on what specifically needs to be done and how or whether combinatorial testing can be used for image data.
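To make the idea more tangible, here is a minimal sketch of pairwise (2-way) combinatorial test generation. It is not taken from the standard. The parameter names and values are hypothetical, and applying the technique to acquisition metadata rather than to pixel data is an assumption made purely for illustration. The greedy selection is simple rather than optimal.

```python
from itertools import combinations, product


def pairwise_suite(parameters):
    """Greedy all-pairs test generation (illustrative, not minimal)."""
    names = list(parameters)
    # Every value pair of every two parameters must appear in at least one test.
    uncovered = {
        ((a, va), (b, vb))
        for a, b in combinations(names, 2)
        for va in parameters[a]
        for vb in parameters[b]
    }
    # All exhaustive combinations serve as the candidate pool.
    candidates = [dict(zip(names, values)) for values in product(*parameters.values())]

    def covers(test, pair):
        (a, va), (b, vb) = pair
        return test[a] == va and test[b] == vb

    suite = []
    while uncovered:
        # Pick the candidate that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda t: sum(covers(t, p) for p in uncovered))
        suite.append(best)
        uncovered = {p for p in uncovered if not covers(best, p)}
    return suite


# Hypothetical image-acquisition parameters, chosen only for illustration.
PARAMETERS = {
    "scanner": ["vendor_a", "vendor_b", "vendor_c"],
    "slice_thickness_mm": [1, 3, 5],
    "contrast_agent": [True, False],
    "patient_age_group": ["child", "adult", "senior"],
}

suite = pairwise_suite(PARAMETERS)
exhaustive = 3 * 3 * 2 * 3
print(f"{len(suite)} pairwise tests instead of {exhaustive} exhaustive combinations")
```

Each generated test is a dictionary that would still have to be mapped to concrete test images and expected results. That mapping is exactly the part on which the standard gives no guidance.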


Some information on the following can be found in other subsections:

- Back-to-back testing

Manufacturers can/should, for example, establish the expected results (test oracles) using alternative implementations. In the medical device sector, not knowing these results would be hard to explain (see above). The alternative implementations can also help find the best performing model and justify the selection of this precise model.

- Metamorphic testing

Metamorphic testing is intended to help generate test cases. To do this, the test looks at how changes to the test input affect the test output. As an example, the standard cites a model that estimates the age of people based on their lifestyle habits. “Metamorphic testing” would look at the influence of nicotine intake with all other inputs being equal.
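A metamorphic test of this kind could be sketched as follows. The model is a hypothetical stand-in with invented coefficients. Treating its output as a life expectancy estimate and using the relation “more nicotine must not increase the prediction” are assumptions made for illustration, not statements taken from the standard.

```python
import random


def predict_life_expectancy(cigarettes_per_day, exercise_hours_per_week, alcohol_units_per_week):
    """Hypothetical stand-in for a trained model; the coefficients are invented."""
    return (
        85.0
        - 0.4 * cigarettes_per_day
        + 0.5 * exercise_hours_per_week
        - 0.1 * alcohol_units_per_week
    )


def check_nicotine_relation(model, n_cases=200, seed=0):
    """Return all source/follow-up pairs that violate the assumed relation."""
    rng = random.Random(seed)
    violations = []
    for _ in range(n_cases):
        source = {
            "cigarettes_per_day": rng.randint(0, 40),
            "exercise_hours_per_week": rng.uniform(0, 10),
            "alcohol_units_per_week": rng.uniform(0, 30),
        }
        # Follow-up case: only nicotine intake increases, all other inputs stay equal.
        follow_up = dict(source, cigarettes_per_day=source["cigarettes_per_day"] + rng.randint(1, 20))
        if model(**follow_up) > model(**source):
            violations.append((source, follow_up))
    return violations


violations = check_nicotine_relation(predict_life_expectancy)
print(f"{len(violations)} violations of the metamorphic relation")
```

The point of the technique is that no exact expected value is needed for any single input. Only the relation between the two outputs is checked.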

Lastly, the authors use it to describe partial dependence plots.
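For readers unfamiliar with the term, the values behind a partial dependence plot can be computed roughly as follows. This is a minimal sketch in plain Python. The model, its coefficients and the three data rows are hypothetical and serve only to show the mechanics.

```python
from statistics import mean


def toy_model(cigarettes_per_day, exercise_hours_per_week):
    """Hypothetical stand-in for a trained model; the coefficients are invented."""
    return 85.0 - 0.4 * cigarettes_per_day + 0.5 * exercise_hours_per_week


def partial_dependence(model, dataset, feature, grid):
    """Average prediction when `feature` is forced to each grid value in every row."""
    curve = []
    for value in grid:
        # Overwrite the feature in every row, keep all other features as observed.
        predictions = [model(**dict(row, **{feature: value})) for row in dataset]
        curve.append((value, mean(predictions)))
    return curve


# Invented example rows, not real data.
DATASET = [
    {"cigarettes_per_day": 0, "exercise_hours_per_week": 4.0},
    {"cigarettes_per_day": 10, "exercise_hours_per_week": 2.0},
    {"cigarettes_per_day": 20, "exercise_hours_per_week": 0.0},
]

curve = partial_dependence(toy_model, DATASET, "cigarettes_per_day", [0, 10, 20, 30])
for value, average in curve:
    print(f"{value:>2} cigarettes/day -> average prediction {average:.1f}")
```

Plotting the pairs in `curve` gives the partial dependence plot for the chosen feature.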

Section 9: White-box testing of neural networks

This section is indeed called “White-box testing of neural networks” and not “White-box testing of AI based systems.” It’s not clear why the authors restrict white-box testing to neural networks only.

This section briefly explains what neural networks, neurons, weights and hidden layers are. It then focuses on test coverage. It introduces several metrics:

- Neuron coverage

- Threshold coverage

- Sign change coverage

- Value change coverage

- Sign-sign coverage

- Layer coverage

ISO 29119-11 dedicates a couple of sentences to each one. However, the standard does not explain what validity these metrics have, either based on the input (image, table data, texts) or on the architecture of the neural network. This would have been helpful, especially since a lot of developers don’t rely only on fully-connected layers.

The authors do not address the role of activation functions either. That a neuron is defined as activated when its output is greater than zero may be true for a ReLU function, but this approach will fail with a sigmoid function.
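The following sketch illustrates the problem for a single dense layer. The weights, biases and test inputs are invented, and the coverage function is our own simplified reading of “neuron coverage”, not a definition quoted from the standard.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def neuron_coverage(weights, biases, inputs, activation, threshold=0.0):
    """Share of neurons whose output exceeds `threshold` for at least one test input."""
    activated = set()
    for x in inputs:
        for j, (w, b) in enumerate(zip(weights, biases)):
            output = activation(sum(wi * xi for wi, xi in zip(w, x)) + b)
            if output > threshold:
                activated.add(j)
    return len(activated) / len(weights)


# Invented layer with four neurons and two inputs each.
WEIGHTS = [[1.0, -1.0], [-2.0, 0.5], [0.5, 0.5], [-1.0, -1.0]]
BIASES = [0.0, -1.0, 0.0, -0.5]
TEST_INPUTS = [[1.0, 0.0], [0.5, 0.5]]

relu_cov = neuron_coverage(WEIGHTS, BIASES, TEST_INPUTS, relu)
sigmoid_cov = neuron_coverage(WEIGHTS, BIASES, TEST_INPUTS, sigmoid)
sigmoid_cov_shifted = neuron_coverage(WEIGHTS, BIASES, TEST_INPUTS, sigmoid, threshold=0.5)

print(f"ReLU, threshold 0:      {relu_cov:.0%}")
print(f"Sigmoid, threshold 0:   {sigmoid_cov:.0%}")
print(f"Sigmoid, threshold 0.5: {sigmoid_cov_shifted:.0%}")
```

Because a sigmoid output is always greater than zero, the “greater than zero” criterion reports full coverage for any non-empty test set and therefore says nothing about the tests. A threshold of 0.5 is one possible adjustment, but that choice is an assumption and not something the standard specifies.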

References to corresponding literature are also largely missing.

Section 10: Test environments for AI-based systems

The last section discusses test environments. It gives some advantages of virtual test environments.

One suggestion is helpful: the test environments should be based on the specific problems (accident reports, issues) not just the system requirements.

However, for medical devices, it is not primarily post-market data that should determine test environment selection but risk management.

The standard does not describe how manufacturers should draw conclusions about the test environment from, for example, accident reports.

3. Overall evaluation of ISO/IEC TR 29119-11

ISO/IEC TR 29119-11 is, as its name already indicates, a technical report. It is common for technical reports to provide background information as well as specific requirements.

a) Non-specific and missing guidance

For a technical report, ISO/IEC TR 29119-11 stays at a very superficial level. The standard can act as an introduction even if a lot of it is explained more precisely, in more detail and more comprehensibly elsewhere.

Because ISO/IEC TR 29119-11 only touches on all the concepts, readers are not told what they can actually do. For example, the standard states that the quality of ML systems depends on the quality of the test and training data. This should come as no surprise to anyone. The standard requires:

The selection of training data in terms of the size of the dataset and characteristics such as bias, transparency and completeness should be documented and justified and confirmed by experts where the level of risk associated with the system warrants it (e.g., for critical systems).

ISO/IEC TR 29119-11 Section 7.5

What specifically should manufacturers do now?

Some sentences are almost tautologous. For example:

a system can be tested for bias by the use of independent testing using bias-free testing sets

ISO/IEC TR 29119-11 Section 6.1.8

b) Incomplete

No standard can claim to be exhaustive. The extent to which ISO 29119-11 leaves out existing knowledge is nevertheless astonishing. For example:

- Definitions of concepts such as explainability, interpretability and transparency ignore existing definitions.

- White-box testing approaches for AI techniques refer to neural networks only.

- Relevant metrics for determining coverage levels, e.g., input and output data space coverage, are ignored.

- There is no reference to the state of the art for model-specific and model-agnostic methods for interpreting and testing ML models (as described, for example, in Christoph Molnar’s book).

- Techniques that are very widespread, such as tree ensembles, are ignored (the standard addresses the specifics of neural networks).

- The validation of machine learning libraries is very demanding. The fact that the standard does not address this is regrettable, since in a lot of devices, this is where most of the code is.
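To give an idea of what one of the missing metrics could look like, here is a minimal sketch of an input data space coverage measure. It divides each feature into bins and reports the share of grid cells hit by at least one test sample. The features, bin edges and samples are invented, and this grid-based definition is only one of several conceivable ones.

```python
def input_space_coverage(samples, bin_edges):
    """Fraction of grid cells (one bin per feature) hit by at least one sample."""

    def bin_index(value, edges):
        # Index of the half-open bin [edges[i], edges[i + 1]) containing the value.
        for i in range(len(edges) - 1):
            if edges[i] <= value < edges[i + 1]:
                return i
        return None  # value lies outside the specified input range

    features = list(bin_edges)
    hit = set()
    for sample in samples:
        cell = tuple(bin_index(sample[f], bin_edges[f]) for f in features)
        if None not in cell:
            hit.add(cell)

    total_cells = 1
    for f in features:
        total_cells *= len(bin_edges[f]) - 1
    return len(hit) / total_cells


# Invented bin edges and test samples, for illustration only.
BIN_EDGES = {"age": [0, 18, 65, 120], "bmi": [10, 18.5, 25, 30, 60]}
TEST_SAMPLES = [
    {"age": 30, "bmi": 22.0},
    {"age": 45, "bmi": 23.0},
    {"age": 70, "bmi": 28.0},
    {"age": 10, "bmi": 17.0},
]

coverage = input_space_coverage(TEST_SAMPLES, BIN_EDGES)
print(f"Input space coverage: {coverage:.0%}")
```

A low value shows at a glance which parts of the intended input range the test data never reach. For high-dimensional inputs such as images, the binning would have to be applied to derived attributes rather than raw pixels.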

c) Not always comprehensible structure

Both the “macroscopic level” of the standard (e.g., section structure) and its “microscopic level” (individual sentences and bulleted lists, use of terms) raise doubts about the standard’s conceptual integrity.

- The sections do not meet the requirements of the MECE principle.

- Terms are confused: for example, hazards and safety, or feature selection and feature engineering, are used synonymously.

- The text switches between AI and ML in a way that is not always easy to follow.

- Different concepts seem to be jumbled together (e.g., reinforcement learning is included in the list of techniques that also includes neural networks and decision trees).

d) Relevance

How relevant the predictions on the use of AI, given to two decimal places and dating from 2018, will be in a few years’ time is open to debate.

The same is true for the coverage levels selected and the focus on neural networks.

A lot of implicit restrictions (e.g., to some of the activation functions) further narrow the actual scope of the standard.

4. Conclusion

People who compile knowledge, structure it, and shape it into a standard deserve our recognition and thanks. This work is mostly done on a voluntary basis.

ISO 29119-11 looks like it was written by people with in-depth knowledge of software engineering. It doesn’t exude expertise in machine learning to the same degree.

To charge CHF 178.00 for a standard of this quality doesn’t seem reasonable.

For medical device manufacturers and auditors, it should be clear:

Tip

ISO/IEC TR 29119-11 does NOT describe the state of the art. Therefore, it should NOT be requested during audits.

Whether you want to spend your money buying the standard and then take the time to read it or would rather invest both in testing your AI-based systems is an individual decision.

In a future article, the Johner Institute will provide some concrete guidance on testing ML-based software. It has already published a comprehensive guide in the form of its free AI guidelines that are also used, in modified form, by notified bodies and will be used as the basis for a future WHO guideline.